Introduction

Compared to other species, Homo sapiens have some major limitations. Examples abound of animals with better vision, hearing and olfaction; with greater strength or agility; that can run, climb or swim faster; and that have more lethal anatomical weapons such as claws, teeth and venom.

Our non-technological achievements, such as the arts and literature, have clearly contributed to making us who and what we are. Nevertheless, it is our mastery of technology over the last 300 000 years1 that has made us the current dominant species on this planet.

These accomplishments are symbiotic: arts such as sculpture have benefited from centuries of technological development, while technical achievements such as ships and weapons often have their own aesthetic qualities, even without adornments that do not contribute to their functionality.

Likewise, medicine has both technological and non-technological qualities. Comparatively recent anatomical, physiological, biochemical and other scientific discoveries have led to major technical advances in clinical care that can significantly enhance our quality of life. Yet good clinical practice remains utterly dependent on the emotional, social, spiritual and other non-technical interactions between patients and their caregivers.2

This article describes the beginnings of what are arguably, for better and for worse, three of the greatest accomplishments in human history: weapons, ships and medicine. The timeframe covers the Palaeolithic (3 300 000–12 000 BCE) and Neolithic (12 000–4500 BCE) Periods, followed by the Copper (6500–1000 BCE), Bronze (3500–300 BCE) and Iron (1500 BCE–800 CE) Ages.

While acknowledging the contributions to these and other technologies from Africa, Asia and the Americas, this and subsequent articles focus mostly on those from Europe, given their eventual relevance to Australian military maritime medicine.3

Prehistoric weapons

A broad generalisation about nomadic hunter-gatherers is that everyone has much the same skills and makes essentially the same items, such as the clothing and tools needed to survive.4 The lack of unique possessions between and within clans during the Palaeolithic and Neolithic Periods, combined with the dispersed population distribution5 and itinerant existence inherent to hunting and foraging, suggests they had limited opportunity or need for trade. It also seems likely that their daily struggle simply to stay alive left little spare capacity for fighting other clans.

These considerations suggest that inter-clan conflicts during this time occurred only as a result of life-threatening water and/or food shortages, and that any ‘fighting’ was mostly ritualistic, with few combat casualties.6

These inferences are supported by the fact that the oldest archaeological evidence of weapons being used on other people rather than animals dates only to about 11 000 BCE.7 This is despite hominids first using weapons up to five million years ago,8 and the oldest weapons to be identified as such (a collection of wooden spears) being dated to 400 000–300 000 BCE.9 The earliest evidence of bows and arrows dates from 71 000 BCE,10 followed by weapons such as clubs,11 axes12 and slingshots.13

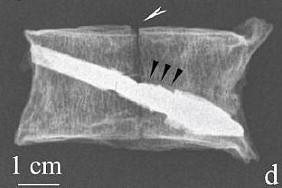

Wooden spear, found Schöningen, Germany, dated c400 000 BCE.14

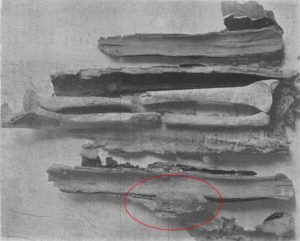

Jebel Sahaba cemetery, northern Sudan, dated c11 000 BCE.17,18 The pencils indicate stone arrowhead marks on the bones, which suggest that the victims were killed by archers.

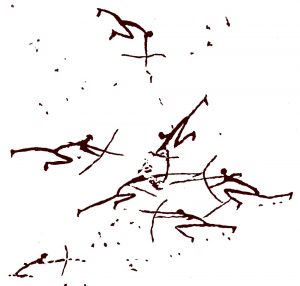

Cave art, Cueva del Roure, Morella la Vella, Castellón, Valencia, Spain, dated c8000 BCE.19 Note the three archers on the right under attack by another four archers on the left… or perhaps vice versa.

The development of animal domestication from 10 000 BCE led to pastoral societies that migrated seasonally depending on the availability of feed and water for their herds.20 Although raiding each other’s animals would have increased the potential for conflict, the ongoing lack of differentiated possessions suggests such events still only occurred when their stock ran short.

While gold, silver and iron are found naturally in metallic form (albeit iron only as meteorites), the discovery that heating certain ores liberated other metals such as mercury, tin, lead and copper was made independently in what is now Syria and in Central America, in the former case c6500 BCE.21 Producing these metals required people to settle where the ores were located, and required food producers to generate enough surplus to feed those who mined and processed them. The latter requirement had already been met by the advent of sustainable agriculture from 12 000 BCE.22 These advances increased the differences in who possessed what, thereby creating the first trading opportunities within and between settlements, while also increasing the scope for conflict.

By 3500 BCE, it had been discovered that an alloy of about 90 per cent copper and 10 per cent tin made bronze, which is harder and less brittle than either metal alone. This led to the gradual replacement of wooden and stone weapons with ones made of copper and later bronze. It also facilitated the development of new edged weapons such as swords and daggers from c2000 BCE.23

Top to bottom: axe with yew handle and copper head, incomplete bow stave and flint knife. All found Ötztal Alps, Italy, dated 3400–3100 BCE.26,27,28

Late Bronze Age sword, found Thames River, Richmond, UK, dated 900–800 BCE.31

Throughout the Neolithic Period and the Copper and Bronze Ages, the scarcity and hardness of meteoric iron limited its use largely to ornamental purposes,35 while the inability to generate the high temperatures required to produce metallic iron from ore precluded its large-scale production.36 The technology that overcame the latter limitation developed independently in multiple locations worldwide, beginning c1500 BCE in the Middle East. It entailed heating the ore with charcoal, which also produced carbon monoxide; together, the heat and the carbon monoxide chemically reduced the ore to a spongy metallic ‘bloom’, which was then repeatedly heated and hammered to remove impurities.37

However, the resulting ‘wrought’ iron is actually softer than bronze. It was not until c900 BCE that it was found that reheating iron with additional charcoal transfers carbon to its surface. If the iron is then rapidly cooled (quenched) in water or oil, the result is a hard steel surface over a tough, flexible iron interior. This discovery led to iron displacing bronze, particularly for edged weapons, which could be made longer and kept sharper.38 Their effectiveness in hand-to-hand combat went unchallenged until the first handheld firearms were developed in China in the 13th century CE.39

Prehistoric ships

The development of trade between the first farms, hamlets and villages was initially limited by the carrying capacity of individual people (no more than 40–50 kg each), which was suitable only for small, lightweight and/or valuable merchandise.43 It was not until 4000 BCE that oxen were first harnessed to pull sledges, while ponies and donkeys were not domesticated until 1000 years later.44 Even with the first wheeled carts from c3150 BCE,45 transporting large amounts of bulky and/or heavy commodities overland remained inefficient and expensive until the development of the first railways.46

Hence, the technological developments that led to the first vessel to achieve sustainable and controlled waterborne travel with a person (and later cargo) aboard arguably rank with those that resulted in the 1903 Wright Flyer47 and the Vostok and Mercury spacecraft.48 These developments were most likely driven by an advantage that persists today: besides their usefulness for fishing, ships49 remain the most efficient means of transporting large and heavy commodities over long distances.50

Like weapons, watercraft technology developed independently in multiple locations worldwide, based on the local materials available. For example, before 8000 BCE hunters in Scandinavia made boats from reindeer skin on antler frames,51 while Egyptian boat builders used papyrus reeds.52

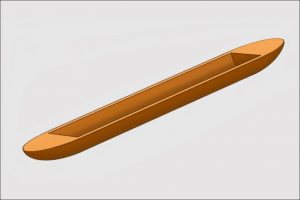

Nevertheless, subsequent advances entailed some elements of parallel evolution, such as dugout boats made from hollowed-out logs. Using northern Europe as an example, the oldest known dugout is dated c8000 BCE.53 By c3000 BCE, European dugouts had developed a ‘spoon’ bow and a separate transom piece, bevelled and tied in place to make the stern watertight. The limit imposed by the width of the parent log first led to planks being lashed in place above the gunwale to increase freeboard, and later to the parent log being split in half with planks added between the halves to increase beam.54

These vessels were all propelled with poles or paddles until the development of oars and rowlocks, which appeared at times ranging from 6000 BCE in Korea55 to c200 BCE in Scandinavia.56

Notwithstanding their greater carrying capacity compared with people, pack animals or carts, the small size and general lack of seaworthiness of these watercraft would have restricted their operations to local rivers, lakes and estuaries. Even so, the earliest evidence of Mediterranean seafaring (dated to 10 000–3000 BCE) consists of flakes of obsidian, found in mainland Greece, that could only have come from the island of Melos, which is 50 nautical miles offshore.57

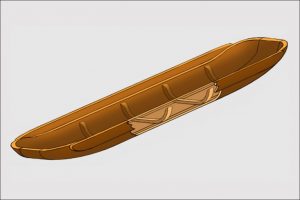

Diagrammatic representation of a northern European dugout, c8000 BCE.58 Note that the actual workmanship would have reflected the stone tools used at the time and was therefore far less neat than shown. (Author)

Diagrammatic representation of a northern European dugout, c3000 BCE.59 Note the spoon bow and transom stern. (Author)

Diagrammatic representation of a northern European dugout, c2700 BCE.60 Note the spoon bow, transom stern and planks added to increase freeboard and beam. (Author)

Diagrammatic representation of a northern European dugout, c1300 BCE.61 The cutaway shows how the parent log has been split in half, with a plank inserted between the halves to increase beam and transverse ribs holding them together. Also note the lack of a keel. (Author)

Prehistoric medicine

While bones from the Palaeolithic and Neolithic Periods indicate human lifespans of only 30 to 40 years, the paucity of other archaeological evidence means that our knowledge of prehistoric medicine is largely speculative.62 Even so, human life expectancy at birth was almost certainly limited by high perinatal and neonatal mortality rates, as these remained high until comparatively modern times.63 Survivors would then have been subject to the health risks inherent to hunter-gatherer and pastoral nomadic societies, in particular communicable disease and trauma.

From a modern occupational and public health perspective, a communicable disease outbreak within a clan could have been devastating, not only because of the morbidity and mortality associated with the outbreak itself, but also because of its second- and third-order effects on the clan’s ability to move, forage and hunt.64 Even so, any pandemic threat to the worldwide human population was limited by the scattered nature of its constituent clan groups and the sparse contacts between them.65

While the agglomeration of people into the first hamlets, villages and towns would have eliminated these indirect consequences, their closer contact in greater numbers would have increased their communicable disease risk.66 The role of watercraft as a communicable disease vector therefore probably emerged early in human history and, together with that of aircraft, persists today.67,68

The absence of any clearly discernible cause probably made disease outbreaks inexplicable, other than as adverse spiritual interventions. This highlights the importance of the spiritual interactions between medical patients and their caregivers, in addition to emotional and social support.

From a modern clinical perspective, actively treating infectious diseases and other medical conditions was limited by the inability to diagnose them accurately. Even when this could be achieved (such as during an epidemic), caregivers still needed to identify the right therapeutic agent(s), where these existed, and then ascertain the right dose.

Notwithstanding thousands of years of empiric trial and error, this process would have been complicated by the sheer number of candidate plants, the high toxicity of some, and, for most others, the quantities required to achieve any therapeutic effect beyond simply inducing vomiting and/or diarrhoea. Success was also unlikely until, from 30 000 BCE, there were enough living grandparents to maintain a living knowledge base within each clan of which agents were effective for which conditions, and at what dose.69

It therefore seems reasonable to suggest that a key reason for today’s limited knowledge of prehistoric therapeutic agents relates to their efficacy: in short, only those that definitely worked for readily diagnosable medical conditions remained in use long enough to be documented in written form.70 While many of the remaining agents were not actively therapeutic, their relative non-toxicity meant they were also unlikely to do much harm. It seems likely that these agents became the basis of folklore-based treatments until comparatively modern times, which arguably remains the case for at least some complementary medicines.71

As previously indicated, the size and speed of many animals would have made them highly dangerous to hunt with the weapons available.72,73,74,75 Plant foraging undoubtedly posed its own hazards, including competition with larger and faster animals and/or being stalked by ambush or pack predators.76,77 To these hazards can be added ample scope for slips, trips, falls and crush injuries, especially in rough terrain.78

The risks posed to each clan by individuals with injuries that left them unable to travel were probably similar to, albeit perhaps less dire than, those posed by communicable disease. The greater threat to the clan would have come from the accumulation of members with injuries or chronic impairments that left them temporarily or permanently unable to hunt or forage.

In this respect, the obvious connection between cause and effect, particularly for uncomplicated cuts, abrasions, limb fractures and perhaps even dental conditions, is likely to have helped identify some effective surgical treatments through the empiric methods described above.79,80 Even so, head, spinal, chest and abdominal injuries in particular remained almost universally fatal until modern times. Likewise, wound complications such as infection (especially from retained foreign bodies), gas gangrene and tetanus would have had very high morbidity and mortality rates. Exceptions from this time are therefore highly remarkable.

Bark splints applied to a left midshaft radial/ulnar fracture of a 14-year-old girl, Fifth Egyptian Dynasty (2498–2345 BCE).82 The circled area is a blood clot adhering to the linen over the splint, implying that these fractures were compound.

Left (A): posterior view of a healed, displaced proximal fracture of the left humerus, Nubian Egypt, c1539–1075 BCE.83

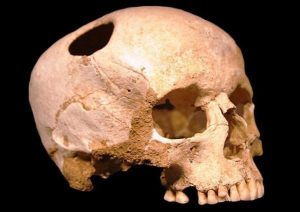

Trepanned skull, Omdurman, Sudan, 5000–4000 BCE.84 Note the new bone formation around the edge of the hole in the cranium, suggesting the patient survived. Although numerous such cases have been found, the reason(s) for performing this procedure on apparently healthy individuals remain unknown.

Conclusion

Weapons, ships and medicine have been closely linked since late prehistory. A key theme in their history is that each developed independently in multiple regions worldwide. This most likely reflects the fact that watercraft, which for hundreds of years became the only means of communication between these regions, were initially suitable only for local rivers, lakes and estuaries.

While the first weapons, up to five million years ago, allowed hominids to progress from scavenging to hunting, current archaeological evidence indicates that inter-human conflict began only about 11 000 BCE. This suggests that the struggle to survive was hard enough without fighting each other (except in dire environmental circumstances), and that no-one had anything worth trading or fighting for that they could not make themselves.

People with illnesses or injuries that limited or prevented their participation in the hunting and/or foraging activities necessary to survive would have had significant second- and third-order adverse effects on the rest of their clan. From a modern occupational and public health perspective, the breadth and depth of these effects appear to receive little attention, at least in the current non-specialist palaeontological literature. Even so, it seems that the benefits to each clan of actively caring for its disabled members outweighed the more Darwinian alternatives; were this not so, the latter would probably have a far greater place in modern society.86

The empiric methods available to identify effective surgical treatments for uncomplicated cuts, abrasions and limb fractures were probably relatively straightforward, especially after there were sufficient living grandparents to maintain an ongoing knowledge base. Even so, head, spinal, chest and abdominal injuries remained almost universally fatal until modern times, along with very high morbidity and mortality rates from wound complications.

The first farming communities from 12 000 BCE, followed 2000 years later by the first domesticated animals, allowed people to settle where they could mine and process metal ores from c6500 BCE. Although this would have eliminated some of the medical hazards inherent to hunter-gathering, it is likely to have exacerbated others, such as communicable disease, the aetiology of which remained generally inexplicable until quite recently. The inability to diagnose accurately, or to match diagnosis to treatment, would generally have limited treatment to non-technical emotional, social and spiritual support.

The first settlements would also have led to differences in who possessed what, resulting in commodities being traded within and between them. It seems likely this provided the impetus for developing the first watercraft, not only for fishing but also to transport trade goods in greater quantities than could be achieved otherwise. It probably also led to people with nothing to trade seeking to take what they needed or wanted by force, while their prospective victims sought to defend themselves and their commodities from such attacks.

These developments initiated a cycle: increasing trade drove the need for larger and more efficient ships to transport commodities, and for better weapons for defence or attack, which in turn enabled further trading opportunities. Thousands of years later, this cycle remains relevant to the economic wellbeing of many nations, including Australia.87

Future articles will describe how the worldwide expansion of this cycle from Europe, from the end of the 15th century, frequently led to the near or total annihilation of the participating ships’ crews. It was not until the 18th century that medicine’s role as an operational enabler for this cycle was first recognised. Besides facilitating the European settlement of Australia, this recognition proved crucial to British naval dominance during the 19th century,88 as well as to Allied military success in two world wars, among other 20th-century conflicts.89,90

Author

Dr Neil Westphalen graduated from Adelaide University in 1985 and joined the RAN in 1987. He is a RAN Staff Course graduate and a Fellow of the Royal Australian College of General Practitioners, the Australasian Faculty of Occupational and Environmental Medicine, and the Australasian College of Aerospace Medicine. He also holds a Diploma of Aviation Medicine and a Master of Public Health.

His seagoing service includes HMA Ships Swan, Stalwart, Success, Sydney, Perth and Choules. Deployments include DAMASK VII, RIMPAC 96, TANAGER, RELEX II, GEMSBOK, TALISMAN SABRE 07, RENDERSAFE 14, SEA RAIDER 15, KAKADU 16 and SEA HORIZON 17. His service ashore includes clinical roles at Cerberus, Penguin, Kuttabul, Albatross and Stirling, and staff positions as J07 (Director Health) at the then HQAST, Director Navy Occupational and Environmental Health, Director of Navy Health, Joint Health Command SO1 MEC Advisory and Review Services, and Fleet Medical Officer (2013–2016).

Commander Westphalen transferred to the Active Reserve in 2016.

Disclaimer

The views expressed in this article are the author’s and do not necessarily reflect those of the RAN or any other organisations mentioned.

Corresponding Author: Neil Westphalen neil.westphalen@bigpond.com

Authors: N Westphalen 1,2

Author Affiliations:

1 Royal Australian Navy Reserve

2 Navy Health Service, C/O Director Navy Health